SK Hynix expects the global market for high-bandwidth memory (HBM), a specialised type of DRAM used for artificial intelligence, to grow by 30% a year until 2030, according to Choi Joon-yong, the company’s head of HBM business planning. The projection reflects strong AI-driven demand and comes despite concerns about pricing pressure in a chip sector long treated as a commodity business.

Choi said rising capital expenditure from major cloud providers such as Amazon, Microsoft, and Google will likely boost HBM demand further. The technology, which stacks chips vertically to save space and reduce power consumption, is increasingly critical for processing the massive data loads of complex AI applications. SK Hynix anticipates the HBM market could reach tens of billions of dollars by 2030.

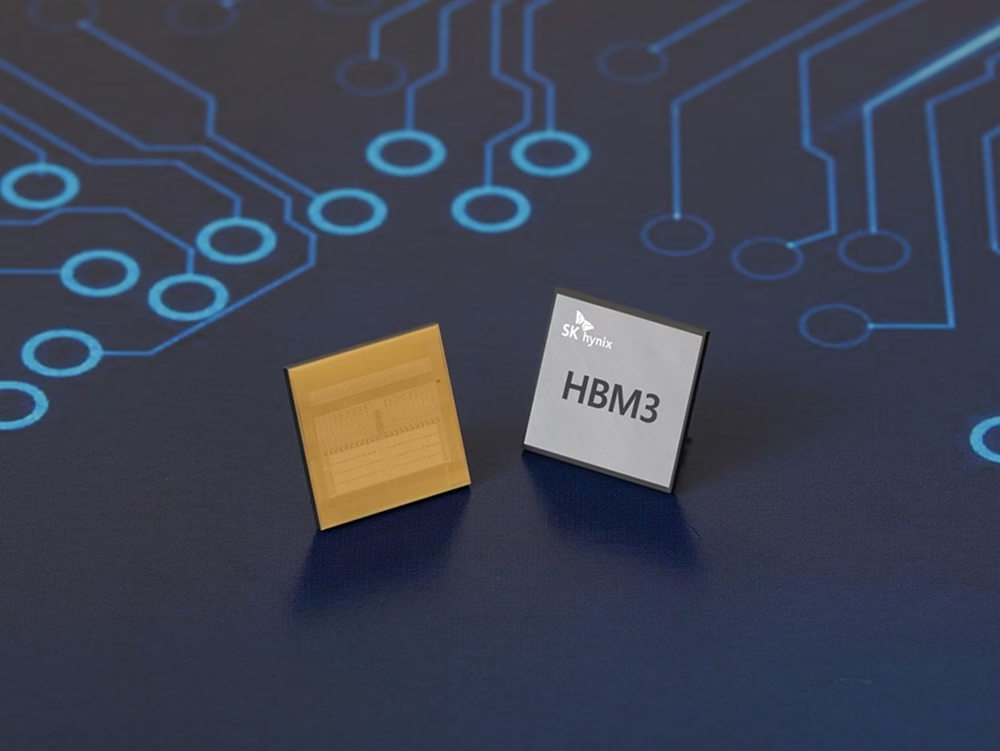

Next-generation HBM4 chips include a customer-specific “base die” that helps manage the memory, which makes one supplier’s product harder to swap for a rival’s. SK Hynix currently provides tailored products mainly to large clients such as Nvidia, but Choi expects customisation to spread to more customers.

SK Hynix is Nvidia’s primary HBM supplier, with Samsung Electronics and Micron Technology contributing smaller volumes. Although Samsung recently warned that HBM3E supply could outpace short-term demand, Choi expressed confidence in SK Hynix’s competitiveness.

On trade policy, U.S. President Donald Trump has announced plans for a 100% tariff on chips imported from countries that do not manufacture in the United States, though details remain unclear. South Korea’s trade envoy said Samsung and SK Hynix would be exempt because of their U.S. investments, which include SK Hynix’s planned advanced packaging and AI R&D facility in Indiana.

Shares of SK Hynix rose as much as 3.5% on Monday and are up 52.4% so far this year, outperforming Samsung’s 33.7% gain, Micron’s 41.3% climb, and the broader KOSPI’s 33.9% rise.